Intro to Regression: Part 5: Interpreting coefficients, centering predictor variables

Let's turn again to the mtcars dataset in R and conduct another simple linear regression. This time we'll regress the response variable, mpg, on horsepower (hp), the predictor variable.

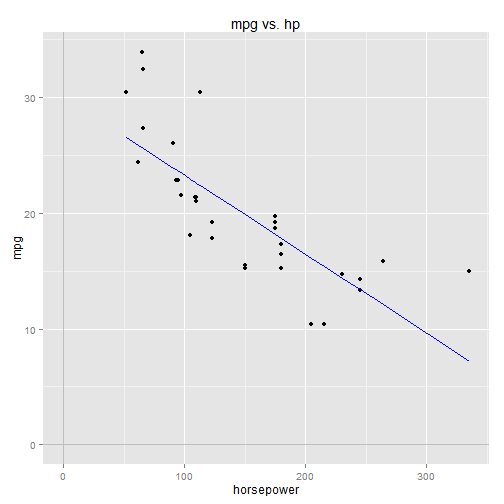

First things first, let's quickly explore the relationship between the two variables using a scatterplot:

    library(ggplot2)
    library(grid)

    data(mtcars)

    # Scatterplot of mpg against hp, with gray reference lines at x = 0 and y = 0
    qplot(x=hp, y=mpg, data=mtcars) +
        ggtitle("mpg vs. hp") +
        xlab("horsepower") +
        geom_vline(xintercept=0, colour="gray") +
        geom_hline(yintercept=0, colour="gray")

As with mpg vs. weight, there's a clear negative correlation between mpg and horsepower.
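To put a number on that visual impression, one could compute the Pearson correlation coefficient with base R's `cor`. This is a small sketch rather than part of the original analysis, and the variable name `r` is chosen here just for illustration.

```r
data(mtcars)

# Pearson correlation between horsepower and fuel economy
r <- cor(mtcars$hp, mtcars$mpg)

# A value close to -1 indicates a strong negative linear relationship
print(round(r, 3))
```

A negative value fairly far from zero is consistent with the downward trend in the scatterplot.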

Building a linear model in R

In R, we use the lm function to build a linear model:

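    model <- lm(mpg ~ hp, data=mtcars)

The formula mpg ~ hp corresponds to the linear model

$$ mpg = \beta_0 + \beta_1 hp + \epsilon $$

where:

- mpg is the response variable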

- hp is the predictor variable

- \(\beta_0\) is the Y-intercept

- \(\beta_1\) is the slope

- \(\epsilon\) is the error term (the residuals)
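As a rough illustration of what the error term means in practice, the sketch below fits the same model and checks that each residual is simply the observed mpg minus the fitted mpg. The names `fit`, `observed`, `fitted_mpg`, and `res` are chosen here for illustration only.

```r
data(mtcars)
fit <- lm(mpg ~ hp, data = mtcars)

observed   <- mtcars$mpg
fitted_mpg <- unname(fitted(fit))      # estimated beta_0 + beta_1 * hp for each car
res        <- unname(residuals(fit))   # estimated error for each car

# Residual = observed response minus fitted response
print(all.equal(res, observed - fitted_mpg))
```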

    summary(model)

    ##
    ## Call:
    ## lm(formula = mpg ~ hp, data = mtcars)
    ##
    ## Residuals:
    ##     Min      1Q  Median      3Q     Max
    ## -5.7121 -2.1122 -0.8854  1.5819  8.2360
    ##
    ## Coefficients:
    ##             Estimate Std. Error t value Pr(>|t|)
    ## (Intercept) 30.09886    1.63392  18.421  < 2e-16 ***
    ## hp          -0.06823    0.01012  -6.742 1.79e-07 ***
    ## ---
    ## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
    ##
    ## Residual standard error: 3.863 on 30 degrees of freedom
    ## Multiple R-squared:  0.6024, Adjusted R-squared:  0.5892
    ## F-statistic: 45.46 on 1 and 30 DF,  p-value: 1.788e-07

From the Coefficients table:

- \(\beta_0\) = 30.09886, the Estimate for the (Intercept) coefficient

- \(\beta_1\) = -0.06823, the Estimate for the hp coefficient

These estimates give the regression line \(mpg = 30.09886 - 0.06823 \cdot hp\), which we graph below:

    B0 = 30.09886
    B1 = -0.06823

    # Predicted mpg for every car, computed from the estimated coefficients
    mpg.predicted <- B0 + B1 * mtcars$hp

    qplot(x=hp, y=mpg, data=mtcars) +
        ggtitle("mpg vs. hp") +
        xlab("horsepower") +
        geom_line(mapping=aes(x=hp, y=mpg.predicted), colour="blue") +
        geom_vline(xintercept=0, colour="gray") +
        geom_hline(yintercept=0, colour="gray")

The regression line cuts right through the data, just as we'd expect.
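Typing the estimates in by hand works, but it rounds them to five decimal places. A possible alternative, sketched here with base R's `coef` and `fitted`, pulls the values straight from the fitted model. The names `b` and `by_hand` are illustrative.

```r
data(mtcars)
model <- lm(mpg ~ hp, data = mtcars)

b  <- coef(model)                # named vector: "(Intercept)" and "hp"
B0 <- b[["(Intercept)"]]
B1 <- b[["hp"]]

# Predictions computed by hand agree with the model's own fitted values
by_hand <- B0 + B1 * mtcars$hp
print(all.equal(by_hand, unname(fitted(model))))
```

Either vector could be passed to `geom_line` in place of `mpg.predicted`.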

Interpreting the coefficients

So \(\beta_0\) = 30.09886 can be interpreted as the predicted mpg for a car with zero horsepower. But zero horsepower is kind of meaningless in this context (is there such a thing as a zero-horsepower car?). This makes \(\beta_0\) difficult to interpret practically. In the next section we'll look at ways to get a more meaningful interpretation out of \(\beta_0\).
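To see why the intercept is an extrapolation, it can help to compare hp = 0 with the horsepower values actually present in the data. A minimal sketch using `predict` and `range`, with `at_zero` and `hp_range` as illustrative names:

```r
data(mtcars)
model <- lm(mpg ~ hp, data = mtcars)

# Predicted mpg at hp = 0 is exactly the intercept
at_zero <- predict(model, newdata = data.frame(hp = 0))
print(unname(at_zero))

# Smallest and largest observed horsepower: hp = 0 lies outside this range
hp_range <- range(mtcars$hp)
print(hp_range)
```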

\(\beta_1\) = -0.06823 relates changes in horsepower to changes in mpg. Geometrically speaking it's the slope of the regression line, and we all know that slope is equal to change-in-Y divided by change-in-X. \(\beta_1\) therefore is equal to the change-in-mpg divided by the change-in-hp.

Another way to put it: \(\beta_1\) is equal to the change in predicted mpg per 1-unit change in horsepower. So a 1-unit increase in horsepower corresponds to a 0.06823 decrease in predicted mpg.
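The per-unit reading of the slope can be checked by predicting mpg for two hypothetical cars that differ by exactly 1 hp. The values 100 and 101 below are arbitrary choices for illustration, as are the variable names.

```r
data(mtcars)
model <- lm(mpg ~ hp, data = mtcars)

# Predicted mpg for two hypothetical cars that differ by 1 hp
pred <- predict(model, newdata = data.frame(hp = c(100, 101)))
one_hp_change <- unname(pred[2] - pred[1])
print(one_hp_change)             # matches the hp coefficient

# The effect scales linearly: a 50 hp difference is 50 times the slope
fifty_hp_change <- 50 * unname(coef(model)["hp"])
print(fifty_hp_change)
```

Because the model is linear, the same difference comes out for any pair of hp values that are 1 unit apart.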

Centering the predictor variable

One way to get a more meaningful intercept is to center the predictor variable: subtract the mean horsepower from each hp value, so that 0 on the new scale corresponds to a car with average horsepower.

    hp.center <- with(mtcars, hp-mean(hp))

    qplot(x=hp.center, y=mpg, data=mtcars) +
        ggtitle("mpg vs. hp (centered)") +
        xlab("horsepower (+147, centered)") +
        stat_smooth(method="lm", se=FALSE, size=1) +
        geom_vline(xintercept=0, colour="gray") +
        geom_hline(yintercept=0, colour="gray")

The regression function is now:

$$ mpg = \beta_0 + \beta_1 (hp - mean(hp)) $$

The \(\beta_1\) term goes to 0 when hp = mean(hp):

$$ \begin{align*} mpg &= \beta_0 + \beta_1 (mean(hp) - mean(hp)) \\[8pt] mpg &= \beta_0 \end{align*} $$

So \(\beta_0\) can now be interpreted as the predicted mpg for a car with average horsepower (hp = mean(hp) ≈ 147). This is a more practical interpretation of \(\beta_0\) than that of a zero-horsepower car.
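One way to see how the centered intercept relates to the original one is to add and subtract \(\beta_1 mean(hp)\) in the uncentered regression function. The symbol \(\beta_0^{c}\) for the centered intercept is notation introduced here for illustration only; the text itself reuses \(\beta_0\) for both models.

$$ \begin{align*} mpg &= \beta_0 + \beta_1 hp \\[8pt] &= \beta_0 + \beta_1 mean(hp) + \beta_1 (hp - mean(hp)) \\[8pt] &= \beta_0^{c} + \beta_1 (hp - mean(hp)), \qquad \beta_0^{c} = \beta_0 + \beta_1 mean(hp) \end{align*} $$

With the earlier estimates and mean(hp) = 146.6875, this gives roughly \(30.09886 - 0.06823 \times 146.6875 \approx 20.09\), up to rounding of the coefficients.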

We can build a model in R using the shifted horsepower data:

    model <- lm(mpg ~ I(hp-mean(hp)), data=mtcars)

    summary(model)

    ##
    ## Call:
    ## lm(formula = mpg ~ I(hp - mean(hp)), data = mtcars)
    ##
    ## Residuals:
    ##     Min      1Q  Median      3Q     Max
    ## -5.7121 -2.1122 -0.8854  1.5819  8.2360
    ##
    ## Coefficients:
    ##                  Estimate Std. Error t value Pr(>|t|)
    ## (Intercept)      20.09062    0.68288  29.420  < 2e-16 ***
    ## I(hp - mean(hp)) -0.06823    0.01012  -6.742 1.79e-07 ***
    ## ---
    ## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
    ##
    ## Residual standard error: 3.863 on 30 degrees of freedom
    ## Multiple R-squared:  0.6024, Adjusted R-squared:  0.5892
    ## F-statistic: 45.46 on 1 and 30 DF,  p-value: 1.788e-07

\(\beta_0\) = 20.09062, which we now interpret to be the predicted mpg for a car with average horsepower (hp ≈ 147).

\(\beta_1\) = -0.06823. Notice that it is unchanged from the unshifted model, as we should expect: it relates changes in horsepower to changes in mpg, and that relationship is unaffected by shifting the data.
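Both observations can be checked side by side by fitting the two models and comparing their coefficients. This is a sketch with illustrative names (`uncentered`, `centered`, `slopes`, `centered_b0`, `at_mean_hp`), not code from the original analysis.

```r
data(mtcars)
uncentered <- lm(mpg ~ hp, data = mtcars)
centered   <- lm(mpg ~ I(hp - mean(hp)), data = mtcars)

# Slopes agree: shifting hp does not change the change-in-mpg per unit of hp
slopes <- c(unname(coef(uncentered)[2]), unname(coef(centered)[2]))
print(slopes)

# The centered intercept equals the uncentered model's prediction at mean(hp),
# which for a simple linear regression with an intercept is also mean(mpg)
centered_b0 <- unname(coef(centered)[1])
at_mean_hp  <- unname(predict(uncentered, newdata = data.frame(hp = mean(mtcars$hp))))
print(c(centered_b0, at_mean_hp, mean(mtcars$mpg)))
```

The fitted values of the two models are also identical, which is why the residuals and R-squared in the two summaries match.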